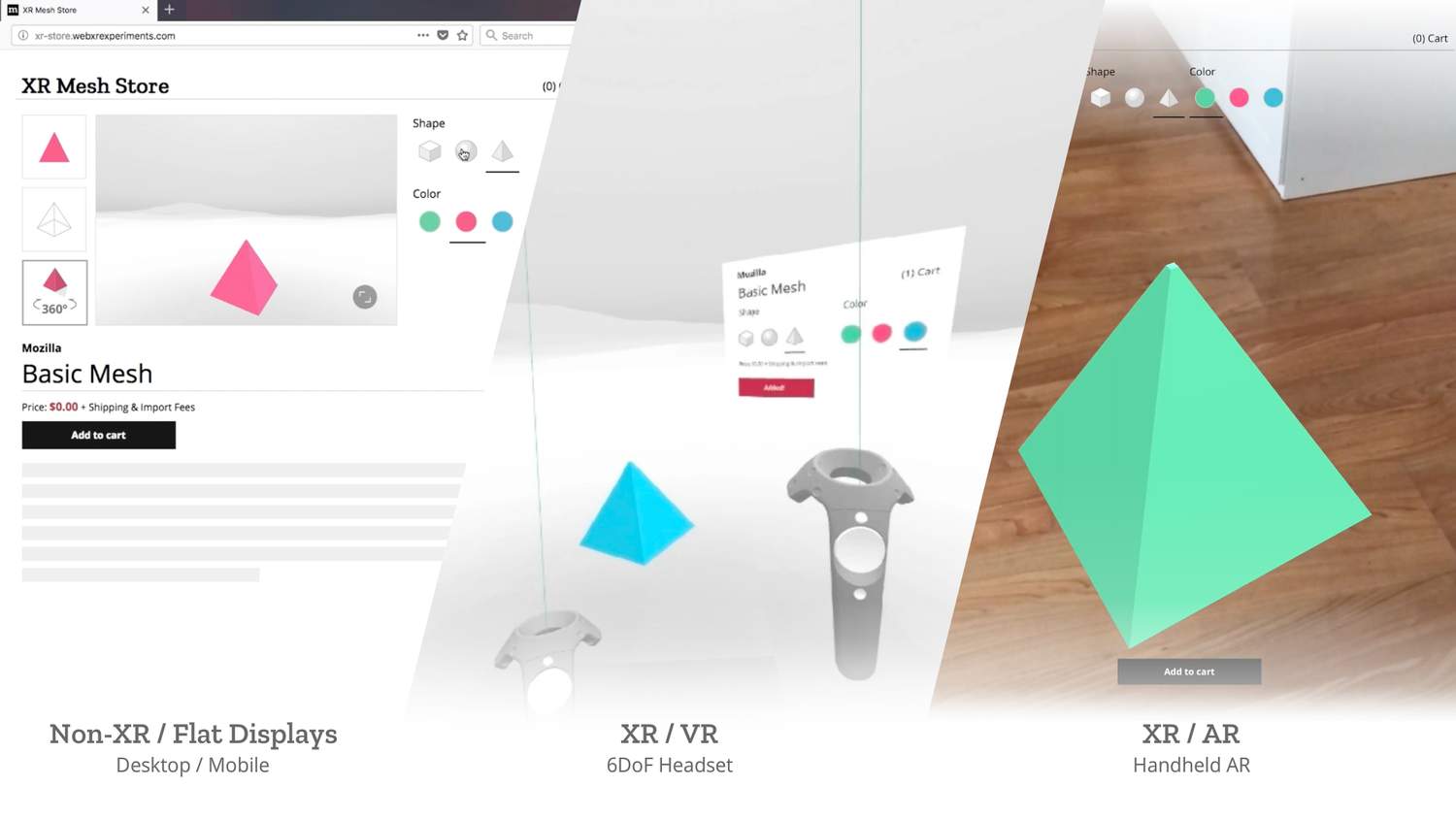

Progressive interfaces

WebXR is amazing: it can be consumed in many ways. You can hook up a Bluetooth mouse or keyboard to your smartphone VR setup, give voice commands, gesture in the air, and so on.

Therefore, SEARXR tries to give the user hints upfront with tooltips, regardless of the device.

Disabling input methods based on the detected device (for example, ignoring a mouse on a smartphone) may feel logical, but from an accessibility point of view it takes options away from people instead of empowering them.
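One way to keep this approach additive is to let detection only ever *add* hints and never remove input handlers. The sketch below is hypothetical: `hintsFor`, the capability flags and the hint texts are illustrative placeholders, not SEARXR's actual API. Mouse and keyboard hints are always present, and detected capabilities merely extend the list.

```javascript
// Hypothetical sketch: detected capabilities only ever ADD hints.
// Nothing is disabled, so a Bluetooth mouse on a phone keeps working.
function hintsFor(caps = {}) {
  // Baseline hints shown on every device, regardless of detection
  const hints = [
    'Mouse: click to select',
    'Keyboard: press Enter to select',
  ]
  // Each detected capability appends a hint; none removes one
  if (caps.touchscreen) hints.push('Touch: tap to select')
  if (caps.microphone) hints.push('Voice: say "select"')
  if (caps.mobileVR) hints.push('Controller: press the trigger to select')
  return hints
}
```

For example, `hintsFor({ touchscreen: true })` returns the two baseline hints plus the touch hint. A false negative in detection therefore costs at most a missing tooltip, never a working input method.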

Detection

Luckily, A-Frame has a few tricks up its sleeve. We've added rudimentary touchscreen detection on top of it as well:

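```javascript
// YOURAPP is a placeholder for your own app's namespace object
window.YOURAPP = window.YOURAPP || {}

// A-Frame's built-in device checks
YOURAPP.ismobile = () => AFRAME.utils.device.isMobile()
YOURAPP.ismobilevr = () => AFRAME.utils.device.isMobileVR()
YOURAPP.isdesktop = () => !YOURAPP.ismobile()

// Calls cb(true) if a microphone can be opened, cb(false) otherwise.
// Note: this triggers the browser's microphone permission prompt.
YOURAPP.ismicrophone = (cb) => {
  // mediaDevices is only exposed in secure contexts (HTTPS or localhost)
  if (!navigator.mediaDevices || !navigator.mediaDevices.getUserMedia) return cb(false)
  navigator.mediaDevices.getUserMedia({ audio: true })
    .then((stream) => {
      // Release the microphone right away; we only wanted to know it works
      stream.getTracks().forEach((track) => track.stop())
      cb(true)
    })
    .catch(() => cb(false))
}

YOURAPP.istouchscreen = () => {
  function detectTouchScreen() {
    let hasTouchScreen = false;
    if ("maxTouchPoints" in navigator) {
      hasTouchScreen = navigator.maxTouchPoints > 0;
    } else if ("msMaxTouchPoints" in navigator) {
      // Legacy Internet Explorer / old Edge
      hasTouchScreen = navigator.msMaxTouchPoints > 0;
    } else {
      const mQ = window.matchMedia && matchMedia("(pointer:coarse)");
      if (mQ && mQ.media === "(pointer:coarse)") {
        hasTouchScreen = !!mQ.matches;
      } else if ("orientation" in window) {
        hasTouchScreen = true; // deprecated, but good fallback
      } else {
        // Only as a last resort, fall back to user agent sniffing
        const UA = navigator.userAgent;
        hasTouchScreen =
          /\b(BlackBerry|webOS|iPhone|IEMobile)\b/i.test(UA) ||
          /\b(Android|Windows Phone|iPad|iPod)\b/i.test(UA);
      }
    }
    return hasTouchScreen;
  }
  // Only Apple user agents are considered here (iPadOS Safari may report
  // itself as "Macintosh"); other touch devices return false.
  return /\b(Macintosh|iPad|iPhone)\b/i.test(navigator.userAgent) && detectTouchScreen();
}
```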
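Because `ismicrophone` reports through a callback while the other checks return synchronously, it can be convenient to collect everything into a single capabilities object. The following is a minimal sketch, assuming the `YOURAPP` helpers above are passed in as `detectors`; the `detectCapabilities` name and the field names are hypothetical. Since the microphone check opens a permission prompt, you may want to run it only when voice input is actually offered.

```javascript
// Hypothetical sketch: combine the synchronous checks and the
// callback-based microphone check into one Promise of a plain object.
function detectCapabilities(detectors) {
  // Wrap the callback API (cb(true) / cb(false)) in a Promise
  const microphone = new Promise((resolve) => detectors.ismicrophone(resolve))
    // If the check itself throws, treat it as "no microphone"
    .catch(() => false)

  return microphone.then((hasMicrophone) => ({
    mobile: detectors.ismobile(),
    mobileVR: detectors.ismobilevr(),
    desktop: detectors.isdesktop(),
    touchscreen: detectors.istouchscreen(),
    microphone: hasMicrophone,
  }))
}

// In the browser: detectCapabilities(YOURAPP).then((caps) => console.log(caps))
```

Passing the detectors in as a parameter, rather than reading `YOURAPP` directly, also makes it easy to test the logic with fake detectors outside the browser.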

In the future, SEARXR will try to hint at the meaning of buttons for each scenario: